How CERN Is Using Linux and Open Source — Linux.com

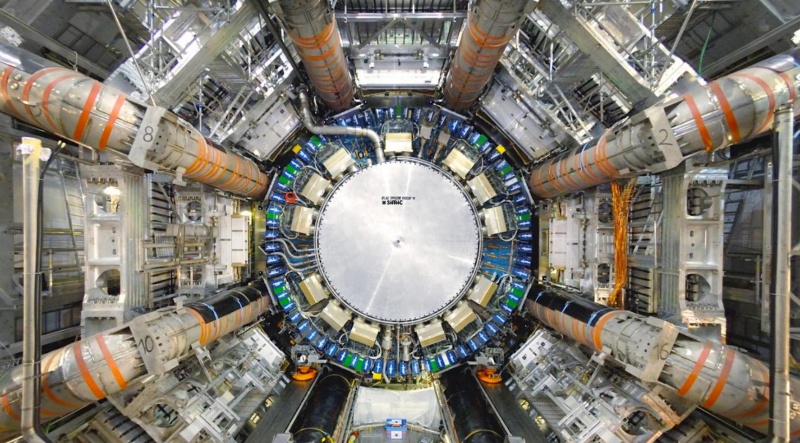

CERN relies on open source technology to handle the huge amounts of data generated by the Large Hadron Collider. ATLAS (shown here) is a general-purpose detector that probes for fundamental particles. (Image courtesy: CERN)

CERN really needs no introduction. Among other things, the European Organization for Nuclear Research created the World Wide Web and the Large Hadron Collider (LHC), the world’s largest particle accelerator, which was used in the discovery of the Higgs boson. Tim Bell, who is responsible for the organization’s IT Operating Systems and Infrastructure group, says the goal of his team is “to provide the compute facility for 13,000 physicists around the world to analyze those collisions, understand what the universe is made of and how it works.”

CERN is conducting hardcore science, especially with the LHC, which generates massive amounts of data when it’s operational. “CERN currently stores about 200 petabytes of data, with over 10 petabytes of data coming in each month when the accelerator is running. This certainly produces extreme challenges for the computing infrastructure, regarding storing this large amount of data, as well as having the capability to process it in a reasonable timeframe. It puts pressure on the networking and storage technologies and the ability to deliver an efficient compute framework,” Bell said.
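To get a feel for those numbers, the storage growth Bell describes can be sketched in a few lines of Python. This is only a rough illustration: it treats "over 10 petabytes" as exactly 10 PB per month, assumes no data is ever deleted, and the function name and the sample run lengths are made up for the example.

```python
# Rough projection of stored data, using the figures quoted by Bell.
# Assumptions (not from CERN): ingest is a flat 10 PB per running month
# (the quote says "over 10", so this is a lower bound) and nothing is deleted.

CURRENT_STORAGE_PB = 200     # "about 200 petabytes" stored today
MONTHLY_INGEST_PB = 10       # "over 10 petabytes" per month while running


def projected_storage_pb(current_pb, monthly_ingest_pb, running_months):
    """Return the stored volume in PB after the given number of running months."""
    return current_pb + monthly_ingest_pb * running_months


if __name__ == "__main__":
    for months in (12, 24, 36):
        total = projected_storage_pb(CURRENT_STORAGE_PB, MONTHLY_INGEST_PB, months)
        print(f"after {months} running months: at least {total} PB")
```

Even under these conservative assumptions, a single year of running adds more than half of what CERN already stores.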

The scale at which the LHC operates and the amount of data it generates pose some serious challenges. But CERN is not new to such problems. Founded in 1954, CERN has been around for more than 60 years. “We’ve always been facing computing challenges that are difficult problems to solve, but we have been working with open source communities to solve them,” Bell said. “Even in the 90s, when we invented the World Wide Web, we were looking to share this with the rest of humanity in order to be able to benefit from the research done at CERN and open source was the right vehicle to do that.”

Using OpenStack and CentOS

Today, CERN is a heavy user of OpenStack, and Bell is a board member of the OpenStack Foundation. But CERN's use of open source predates OpenStack. For years, the organization has been using various open source technologies to deliver services through Linux servers.

“Over the past 10 years, we’ve found that rather than taking on our problems ourselves, we find upstream open source communities with which we can work, who are facing similar challenges and then we contribute to those projects rather than inventing everything ourselves and then having to maintain it as well,” said Bell.

A good example is Linux itself. CERN used to be a Red Hat Enterprise Linux customer. But, back in 2004, it worked with Fermilab to build its own Linux distribution, called Scientific Linux. Eventually the team realized that, because they were not modifying the kernel, there was no point in spending time maintaining their own distribution, so they migrated to CentOS. Because CentOS is a fully open source, community-driven project, CERN could collaborate with the project and contribute to how CentOS is built and distributed.

CERN helps CentOS with infrastructure and also hosts CentOS Dojo events at CERN, where engineers can get together to improve CentOS packaging.

In addition to OpenStack and CentOS, CERN is a heavy user of other open source projects, including Puppet for configuration management and Grafana and InfluxDB for monitoring, and it is involved in many more.

“We collaborate with around 170 labs around the world. So every time that we find an improvement in an open source project, other labs can easily take that and use it,” said Bell. “At the same time, we also learn from others. When large scale installations like eBay and Rackspace make changes to improve scalability of solutions, it benefits us and allows us to scale.”

Solving realistic problems

Around 2012, CERN was looking at ways to scale computing for the LHC, but the challenge was people rather than technology. The number of staff that CERN employs is fixed. “We had to find ways in which we can scale the compute without requiring a large number of additional people in order to administer that,” Bell said. “OpenStack provided us with an automated API-driven, software-defined infrastructure.” OpenStack also allowed CERN to look at problems related to the delivery of services and then automate those, without having to scale the staff.

“We’re currently running about 280,000 cores and 7,000 servers across two data centers in Geneva and in Budapest. We are using software-defined infrastructure to automate everything, which allows us to continue to add additional servers while remaining within the same envelope of staff,” said Bell.

As time progresses, CERN will be dealing with even bigger challenges. The Large Hadron Collider has a roadmap out to 2035, including a number of significant upgrades. “We run the accelerator for three to four years and then have a period of 18 months or two years when we upgrade the infrastructure. This maintenance period allows us to also do some computing planning,” said Bell. CERN is also planning the High-Luminosity Large Hadron Collider upgrade, which will allow for beams with higher luminosity. The upgrade would require about 60 times the compute capacity that CERN has today.

“With Moore’s Law, we will maybe get one quarter of the way there, so we have to find ways under which we can be scaling the compute and the storage infrastructure correspondingly and finding automation and solutions such as OpenStack will help that,” said Bell.
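The gap Bell describes can be worked through as a quick back-of-the-envelope calculation. The sketch below assumes one particular reading of "one quarter of the way there": that hardware improvements alone deliver a quarter of the required 60-fold increase, or 15 times today's capacity. That reading, and the function names, are assumptions for illustration and not CERN's own planning model.

```python
# Back-of-the-envelope look at the compute gap for the High-Luminosity upgrade.
# Assumption: "one quarter of the way there" means hardware gains supply
# one quarter of the required factor (other readings are possible).

REQUIRED_FACTOR = 60     # about 60 times today's compute requirement
HARDWARE_SHARE = 0.25    # share of that factor expected from Moore's Law


def hardware_gain(required_factor, hardware_share):
    """Capacity multiple delivered by hardware improvements alone."""
    return required_factor * hardware_share


def remaining_gap(required_factor, hardware_share):
    """Extra multiple still needed from software, automation, and efficiency."""
    return required_factor / hardware_gain(required_factor, hardware_share)


if __name__ == "__main__":
    gain = hardware_gain(REQUIRED_FACTOR, HARDWARE_SHARE)
    gap = remaining_gap(REQUIRED_FACTOR, HARDWARE_SHARE)
    print(f"hardware alone: about {gain:.0f}x today's capacity")
    print(f"still to find elsewhere: about {gap:.0f}x")
```

Under that reading, roughly a fourfold improvement would still have to come from somewhere other than faster hardware, which is where automation and more efficient infrastructure come in.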

“When we started off the Large Hadron Collider and looked at how we would deliver the computing, it was clear that we couldn’t put everything into the data center at CERN, so we devised a distributed grid structure, with tier zero at CERN and then a cascading structure around that,” said Bell. “There are around 12 large tier one centers and then 150 small universities and labs around the world. They receive samples of the data from the LHC in order to assist the physicists to understand and analyze the data.”
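The cascading structure Bell describes can be pictured as a small data model. The sketch below is purely illustrative: the class and field names are invented, the site counts are the approximate figures from the quote, and labeling the universities and labs as "tier 2" is an assumption, since the quote does not name that level.

```python
# Illustrative model of the tiered grid described in the quote.
# Names are hypothetical; counts are the approximate figures Bell gives.
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    level: int          # 0 is CERN itself; higher levels sit further out
    description: str
    site_count: int     # approximate number of sites at this level


GRID = [
    Tier(0, "CERN data center", 1),
    Tier(1, "large tier one centers", 12),
    Tier(2, "small universities and labs (tier label assumed)", 150),
]


def total_sites(tiers):
    """Count every site across all tiers of the grid."""
    return sum(tier.site_count for tier in tiers)


if __name__ == "__main__":
    for tier in GRID:
        print(f"tier {tier.level}: {tier.site_count:>3} x {tier.description}")
    print(f"total sites: about {total_sites(GRID)}")
```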

That structure means CERN is collaborating internationally, with hundreds of institutions around the world contributing toward the analysis of that data. It boils down to a fundamental principle: open source is not just about sharing code, it’s about people collaborating to share knowledge and achieve what no single individual, organization, or company can achieve alone. That’s the Higgs boson of the open source world.

Source: https://www.linux.com/blog/2018/5/how-cern-using-linux-open-source